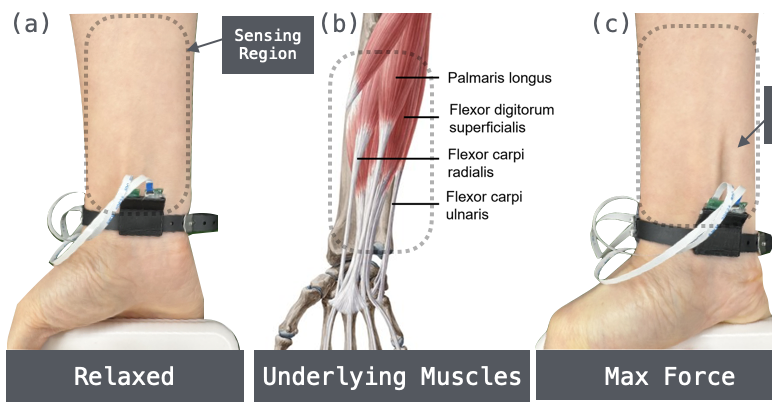

EchoForce: Continuous Grip Force Estimation from Skin Deformation Using Active Acoustic Sensing on a Wristband

Kian Mahmoodi*, Yudong Xie*, Tan Gemicioglu*, Chi-Jung Lee, Jiwan Kim, Cheng Zhang

Published in Proceedings of the 2025 ACM International Symposium on Wearable Computers, 2025

Abstract

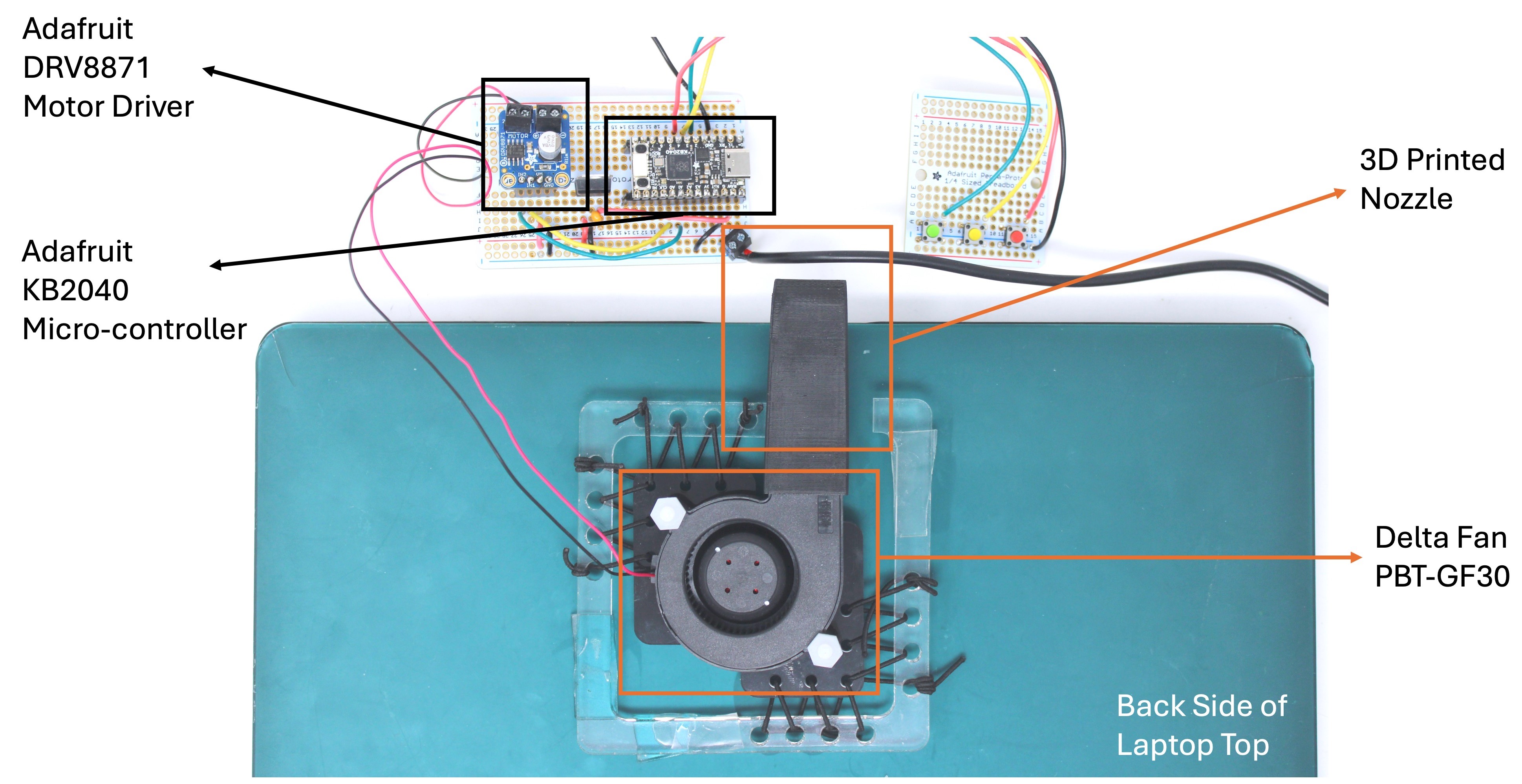

Grip force is commonly used as an overall health indicator in older adults and is valuable for tracking progress in physical training and rehabilitation. Existing methods for wearable grip force measurement are cumbersome and user-dependent, making them unsuitable for practical, continuous grip force measurement. We introduce EchoForce, a novel wristband using acoustic sensing for low-cost, non-contact measurement of grip force. EchoForce captures acoustic signals reflected from subtle skin deformations caused by flexor muscles on the forearm. In a user study with 11 participants, EchoForce achieved a fine-tuned user-dependent mean error rate of 9.08% and a user-independent mean error rate of 12.3% using a foundation model. Our system remained accurate across sessions, hand orientations, and users, overcoming a significant limitation of past force sensing systems. EchoForce makes continuous grip force measurement practical, providing an effective tool for health monitoring and novel interaction techniques.