Page Not Found

Page not found. Your pixels are in another canvas.

A list of all the posts and pages found on the site. For you robots out there, an XML version is available for digesting as well.

Published:

I originally wrote this for a past iteration of CS3001: Computing and Society at Georgia Tech. I quite like it, and I think the issues are increasingly relevant, so I decided to put it up here.

Published:

Our full paper for SilentSpeller was accepted to CHI 2022!

Published:

Joined the BCI Society Postdoc and Student Committee to organize an open science initiative for BCI learning resources.

Published:

Our work on SilentSpeller was featured by BuzzFeed and other media outlets.

Published:

My position paper on Passive Haptic Rehearsal was accepted to the Intelligent Music Interfaces workshop at CHI 2022!

Published:

Earned the PURA Travel Award to support my travel to New Orleans for CHI 2022. Thank you UROP!

Published:

Presented on Passive Haptic Rehearsal at Georgia Tech’s Undergraduate Research Symposium and earned the 2nd place Oral Presentation Award!

Published:

Attended CHI 2022 in New Orleans. Excellent discussions and really enjoyed meeting folks at my first in-person HCI conference!

Published:

Started internship at Microsoft Research with the Audio and Acoustics group’s brain-computer interface team!

Published:

Finished my internship project on tongue gesture recognition and am back at Georgia Tech. Looking forward to sharing our findings at ISWC/UbiComp and beyond!

Published:

Our live demonstration of passive haptic learning earned the Best Demo Award at UbiComp 2022!

Published:

Excited to be a member of the newly-formed Futuring SIGCHI committee!

Published:

Graduated from my Bachelor’s in Computer Science with highest honors. Starting a Master’s degree as part of my BS/MS in January.

Published:

I’ll be presenting my work on combining tongue gestures with gaze tracking at CHI 2023 Interactivity in April. Look forward to seeing everyone in Hamburg.

Published:

Honored to receive the Sigma Xi Best Undergraduate Research Award and Donald V. Jackson Fellowship Award for my research involvement and leadership at Georgia Tech!

Published:

I’ll be starting my PhD at Cornell Tech in NYC in the Fall with a Cornell Fellowship. I’ll be advised by Dr. Tanzeem Choudhury and Dr. Cheng Zhang, researching closed-loop passive interventions and intent-driven interaction.

Published:

Moved to NYC and started my PhD! I’ve also started a new role as Postdoc and Student Committee Chair for the Brain-Computer Interface Society.

Published:

A very busy October coming up! I will be a student volunteer at UbiComp/ISWC 2023 in Cancun, while Mike Winters presents our paper on multimodal tongue gesture recognition at ICMI 2023. Afterward, I’ll be remotely assisting with our first SIGCHI Emerging Scholars workshop at CSCW 2023. Finally, David Martin will be in NYC to present our poster on fingerspelling with TapStrap at ASSETS 2023.

Published:

Attending UIST 2024 in Pittsburgh with some new demos to share. Also, my paper on accelerating piano learning via PHL was accepted to IMWUT for the December 2024 issue! Look forward to UbiComp 2025 for the presentation!

Published:

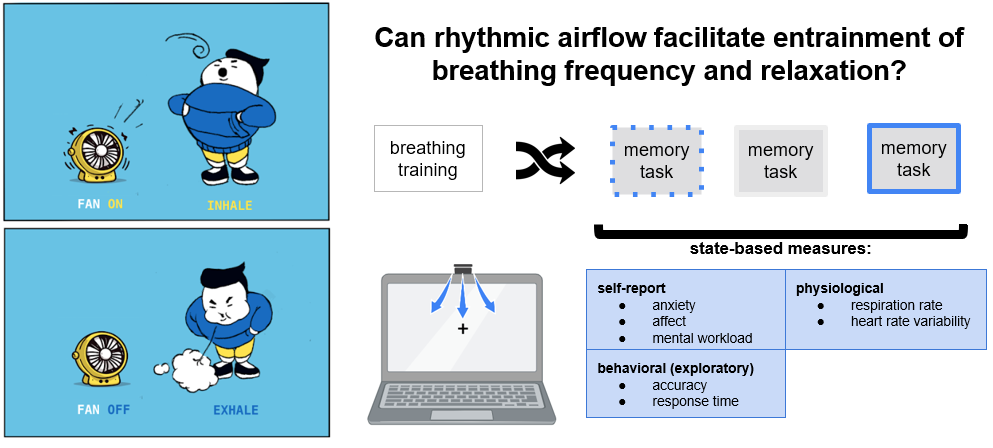

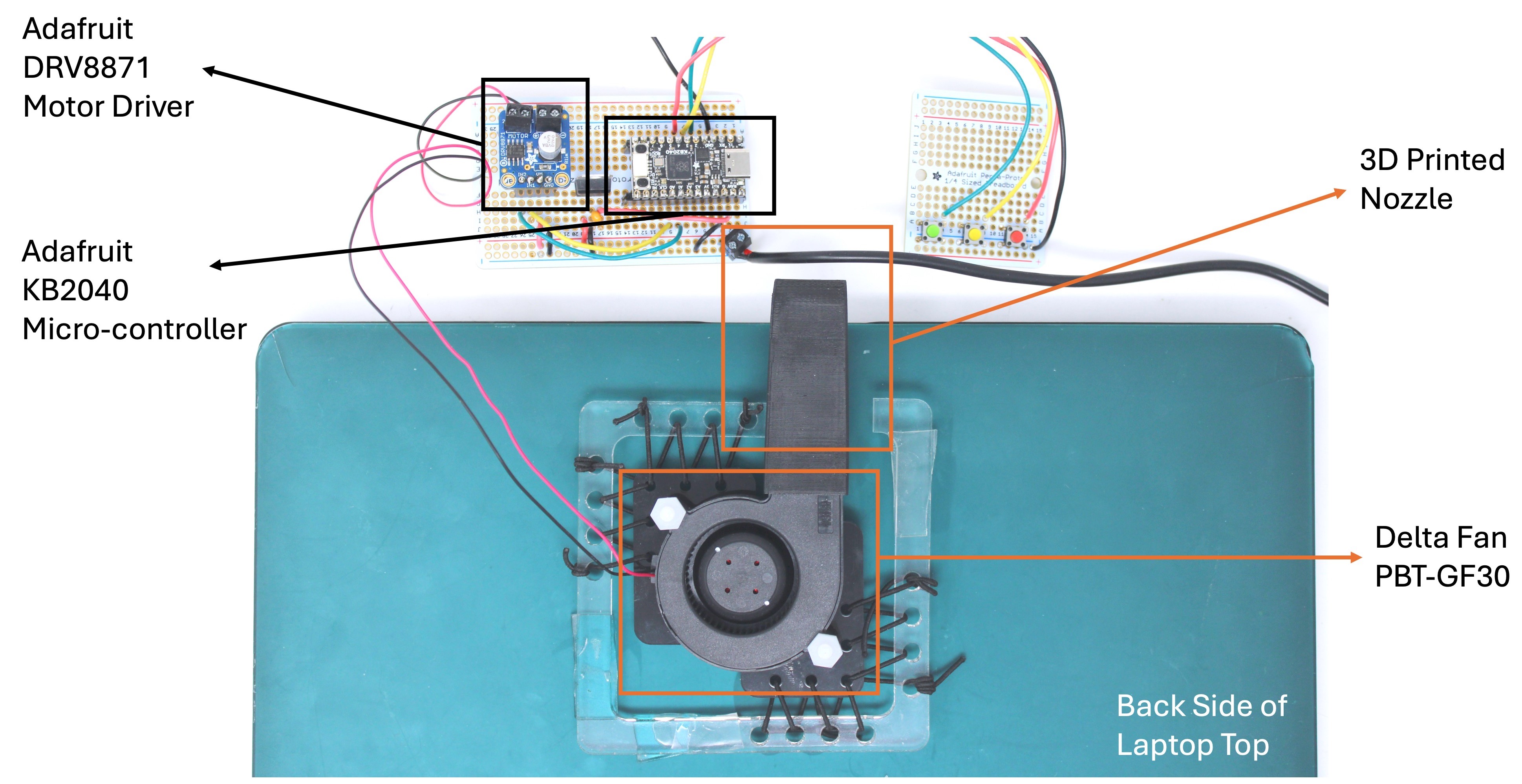

BreathePulse, the first airflow-based breathing guide, was also accepted to IMWUT for the December 2024 issue! My first project at Cornell and a wonderful collaboration with my labmates Thalia Viranda and Yiran Zhao.

Published:

Our review of the Master Classes for the 2023 BCI Meeting was accepted to the Journal of Neural Engineering’s Special Issue on Brain-Computer Interfaces! I will also be an Associate Chair for CHI 2025 Late-Breaking Work.

Published:

I am attending CHI 2025 in Yokohama! I’ll be presenting a draft of my research vision on autonomic interfaces at the Sensorimotor Devices Workshop. I am also bringing demos for passive haptic rehearsal, BreathePulse, and vagus nerve stimulation for appetite modulation. Come say hi!

Published:

Presented my early work on using transcutaneous vagus nerve stimulation as a momentary appetite intervention at InterfaceNeuro in Atlanta!

Published:

EchoForce, our project on continuous, user-independent grip force tracking via acoustic sensing, was accepted to ISWC 2025!

Published:

I’m co-organizing a workshop on Everyday Perceptual and Physiological Augmentation at UIST 2025! Join us in exploring how human augmentation can be made practical through everyday devices!

Published:

Thanks to all who joined our workshop. Next, I’ll be in Finland for UbiComp 2025, where I’ll be presenting three projects: BreathePulse, Passive Haptic Rehearsal, and EchoForce.

Published:

As a small high-school team, we competed in the IEEE Robotics and Automation Society’s Humanitarian Robotics and Technologies Challenge by applying machine learning for autonomous mine detection with a metal detector on a low-cost robot platform. We earned 3rd place in the competition and demonstrated our robot at ICRA 2017 as a finalist.

organizations competition, machine-learning, robotics code Finalist Award

Published:

I designed and applied a framework for training reinforcement learning models to control rapid-action mobile robots. My team became a finalist from among 100+ teams and earned 11th place at ICRA 2018 as the only high-school team to ever compete in the challenge.

organizations competition, machine-learning, robotics report Finalist Award

Published:

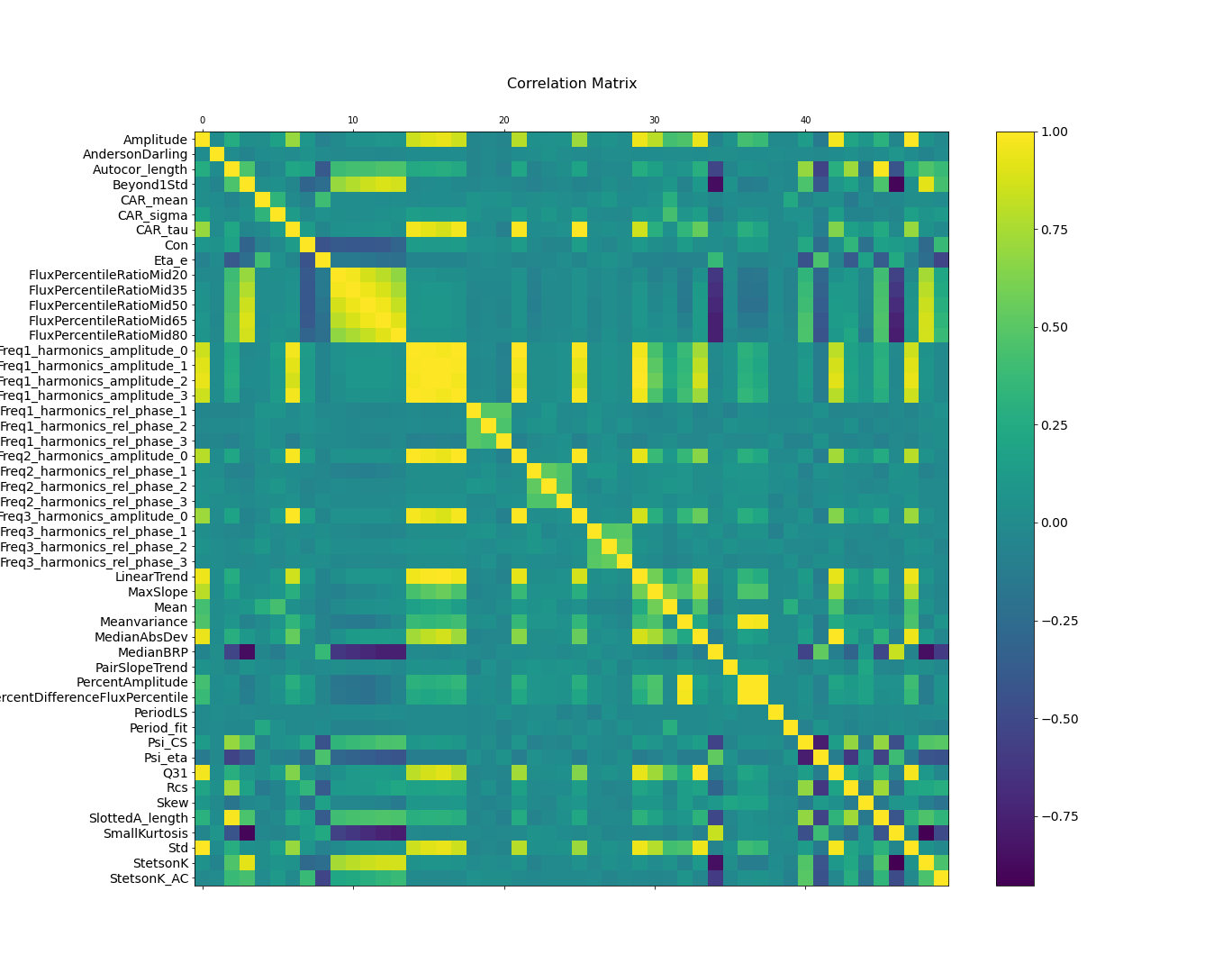

Traditionally, exoplanet discovery relies on expert astronomers or models that integrate astronomy domain knowledge. We applied machine learning and feature extraction techniques to get 80% accuracy for stellar light curves in NASA’s Mikulski Archive, demonstrating that domain knowledge is no longer necessary for discovery of new exoplanets.

Published:

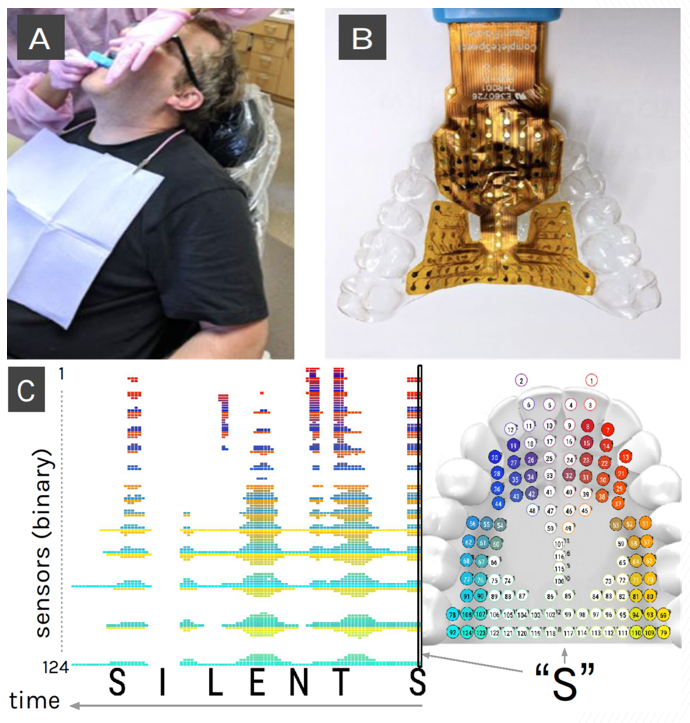

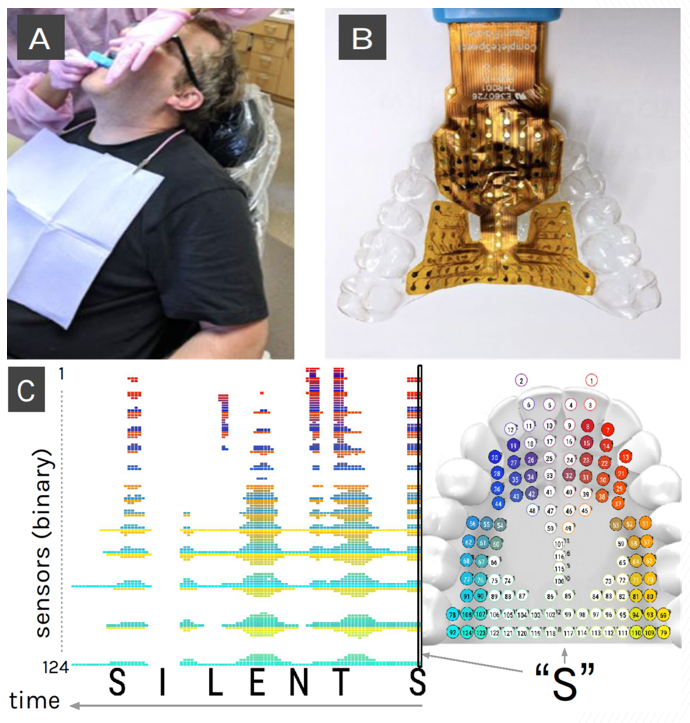

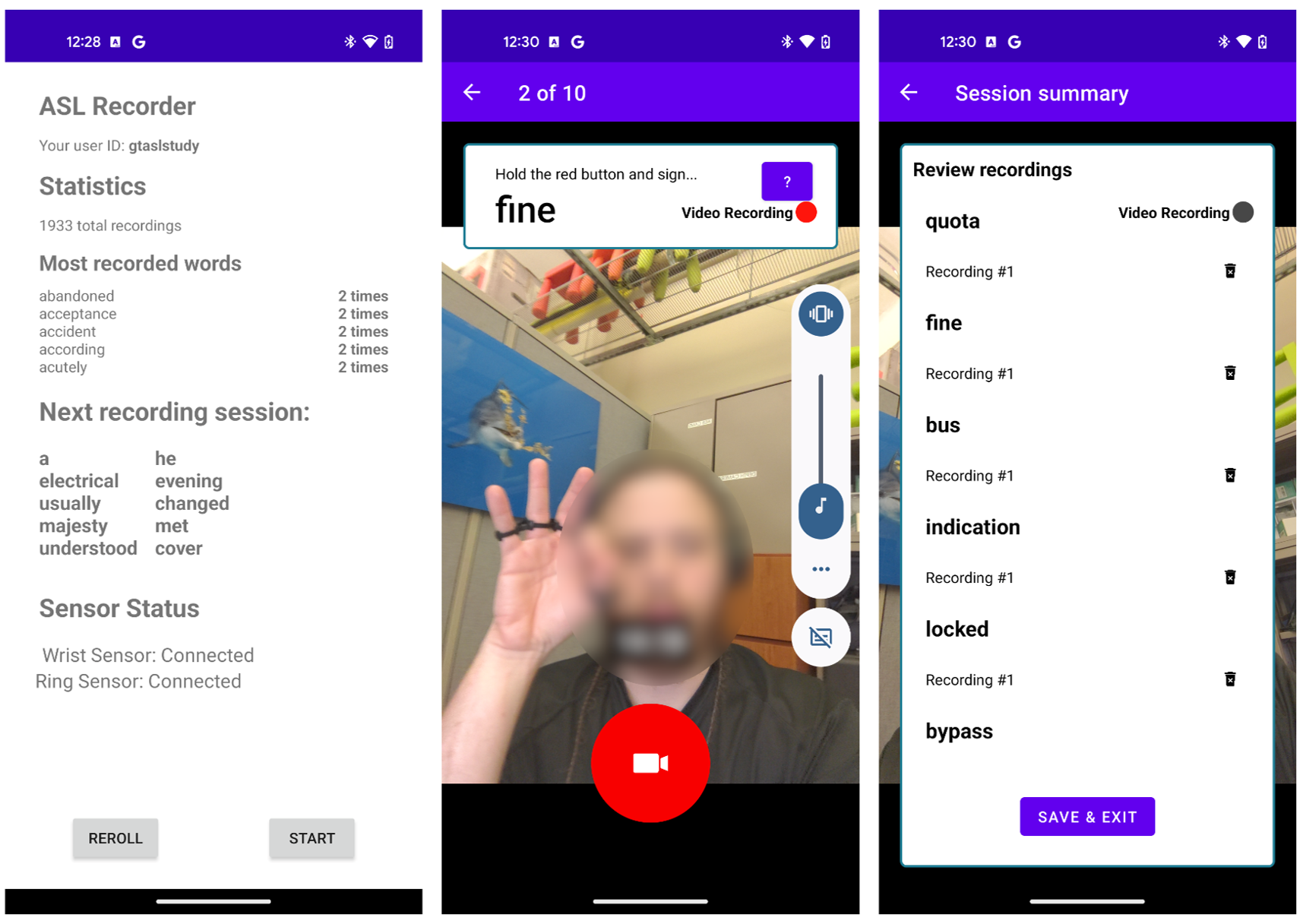

Silent speech systems provide a means for communication to people with movement disabilities like muscular dystrophy while preserving privacy. SilentSpeller is the first-ever silent speech system capable of use with a >1000 word vocabulary while in motion. We made a novel text entry system with capacitive tongue sensing from an oral wearable device to enable a privacy-preserving alternative to speech recognition.

research assistive-technology, subtle-interaction, wearables video CHI'22 paper CHI'21 Interactivity paper UROP Outstanding Oral Presentation Award BuzzFeed

Published:

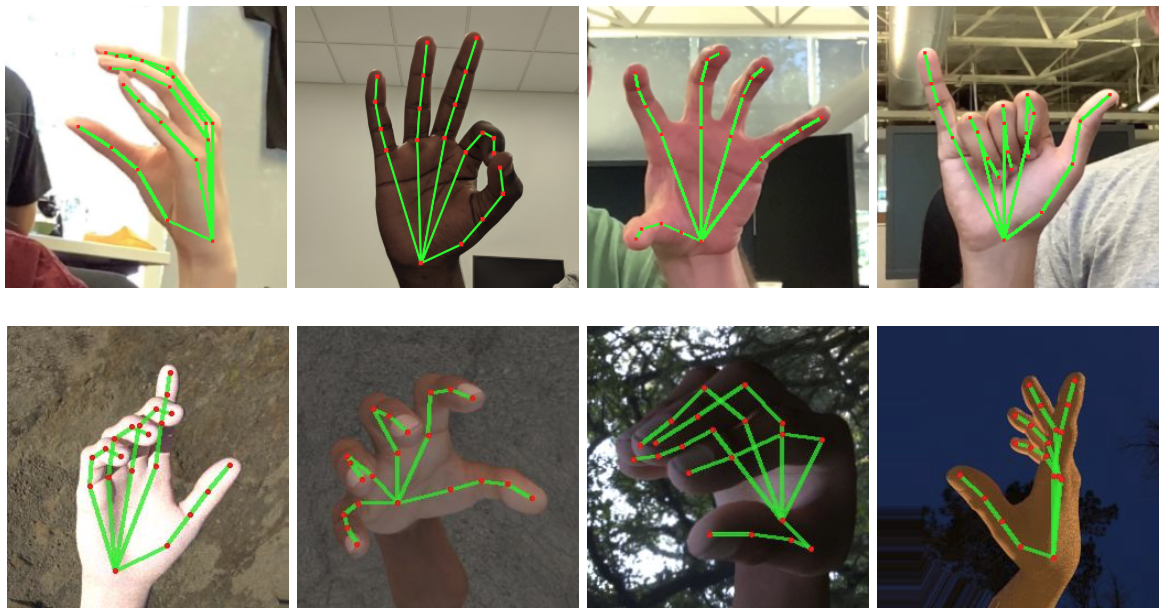

Estimating hand poses is valuable for gesture interactions and hand tracking but often requires expensive depth cameras. Stereo cameras show multiple perspectives of the hand, allowing depth perception. We created a pipeline for estimating location of hand and finger keypoints with a stereo camera using deep convolutional neural networks.

Published:

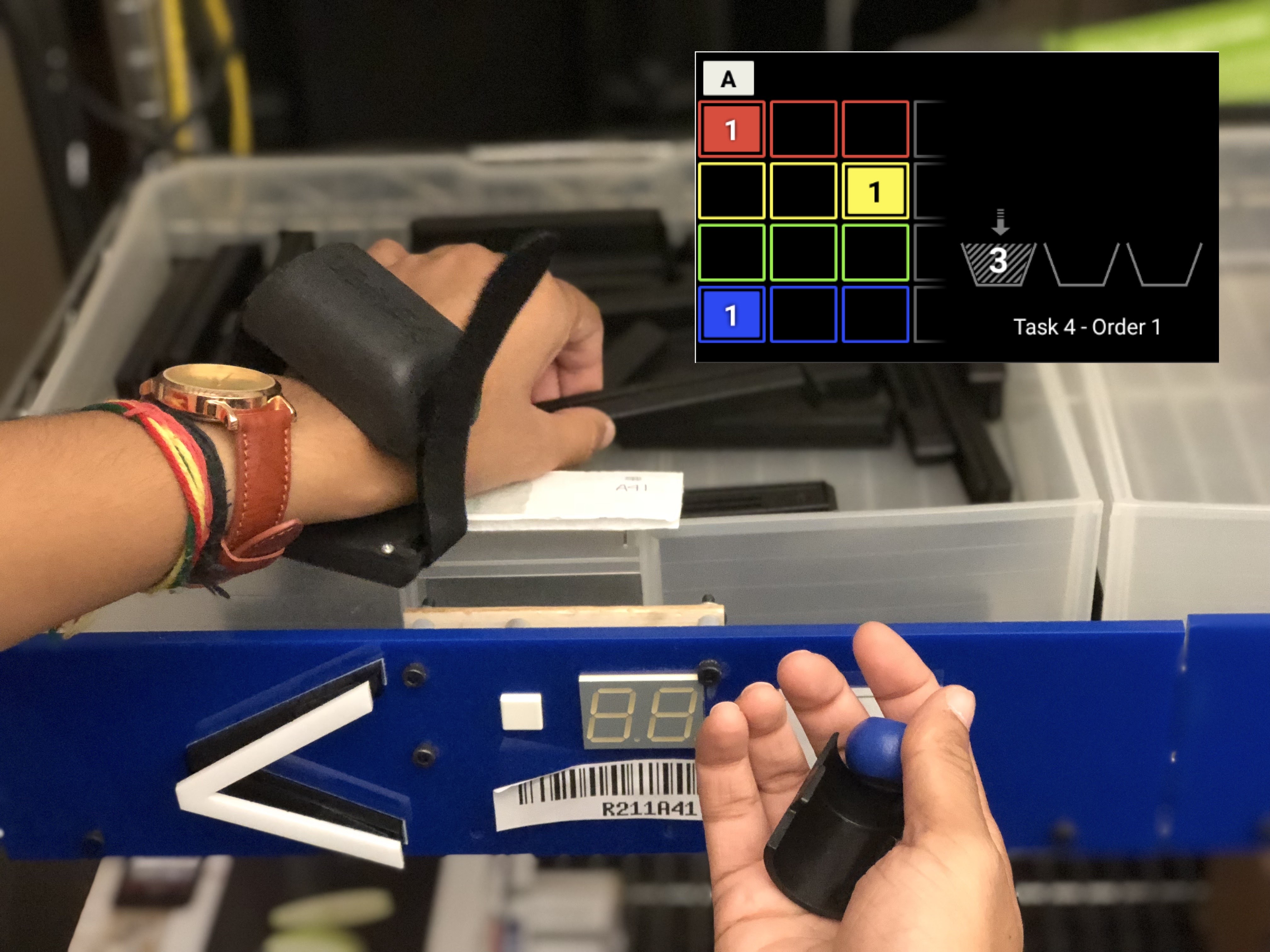

Traditionally, computer vision models are trained using large datasets gathered online. We investigated a new method for training unsupervised object recognition models using egocentric computer vision from head-worn displays. In particular, we aimed to classify objects for order picking in warehouses.

research computer-vision, head-worn-displays, machine-learning

Published:

We developed a new brain-computer interface using fNIRS to detect attempted motor movement in different regions of the body, converting attempted motions to language to enable more versatile communication options for people with movement disabilities. For my undergraduate thesis, I explored how transitional gestures may enable higher accuracy and information transfer with brain-computer interfaces.

research assistive-technology, brain-computer-interfaces, fnirs undergraduate thesis President's Undergraduate Research Award

Published:

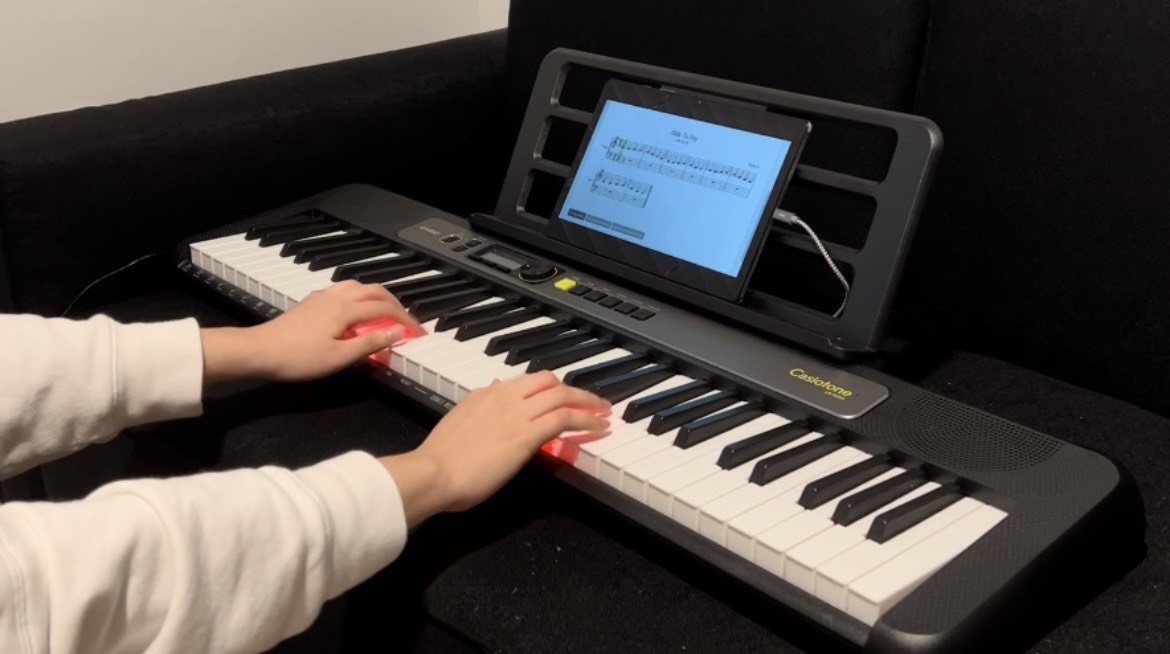

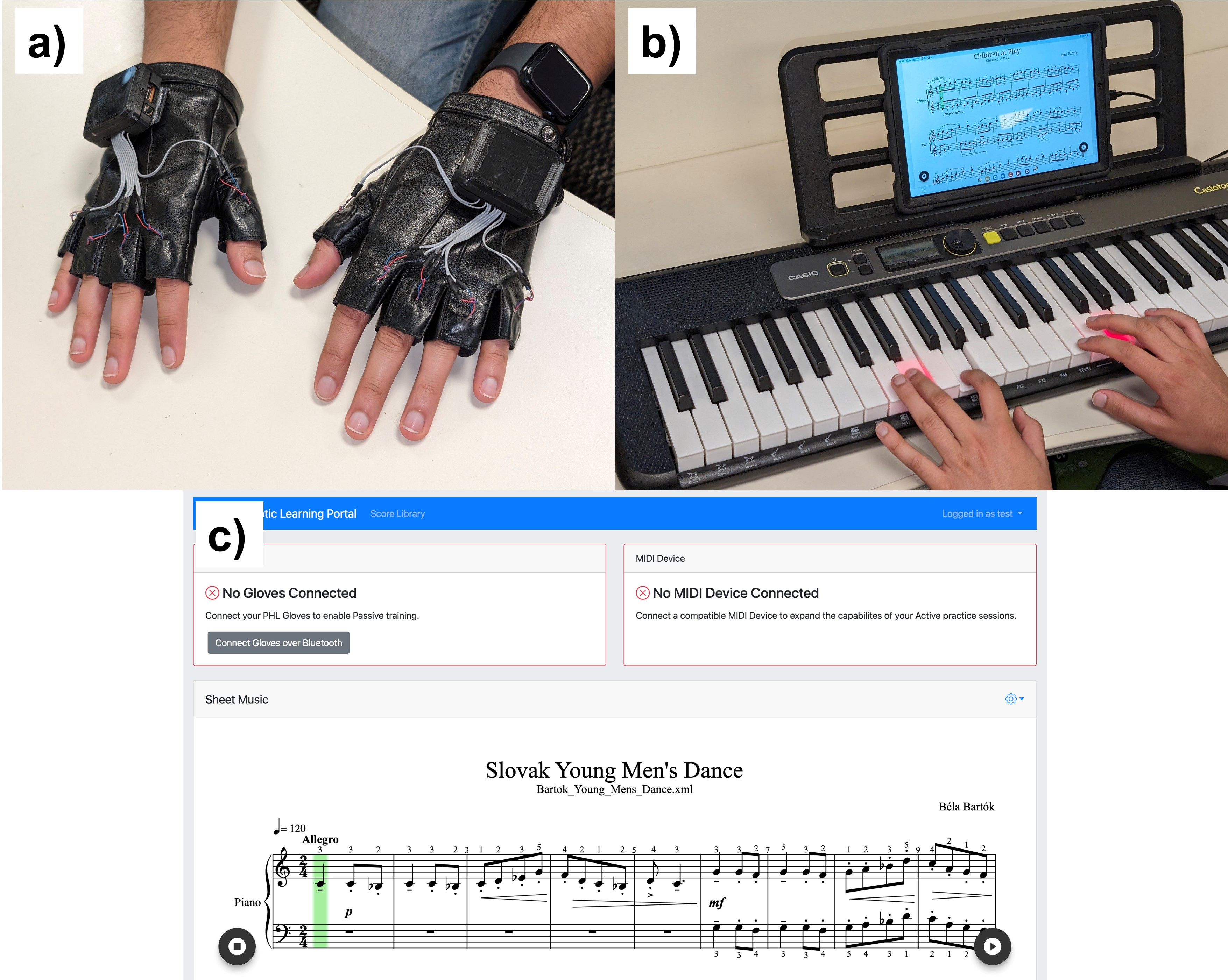

Learning piano is difficult, especially for older learners with busy lives. Passive haptic learning can reduce time spent practicing piano through instructional tactile cues. We designed a custom vibrotactile haptic glove for daily wear, enabling faster learning of piano skills. I led a group of undergraduate and graduate students in manufacturing glove hardware, designing a web portal and organizing user studies to evaluate performance.

research haptics, music, wearables video IMWUT'24 paper UbiComp'22 demo CHI'22 IMI abstract UbiComp'22 Best Demo Award UROP Outstanding Oral Presentation Award

Published:

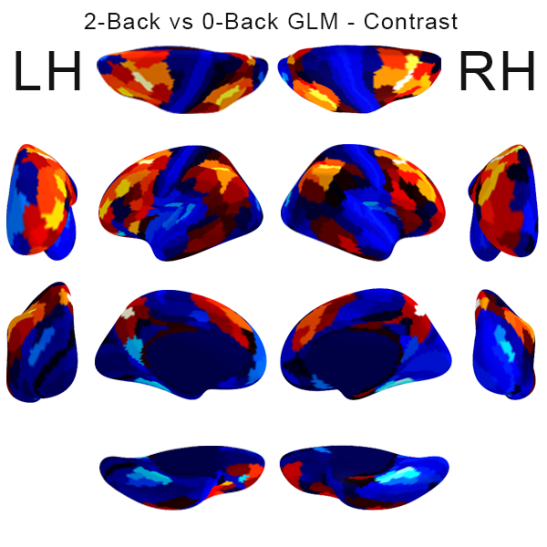

As part of a Neuromatch Academy student collaboration, we investigated working memory activity through N-back tasks with different sequence lengths and stimuli based on the Human Connectome Project’s task-based fMRI data. We localized and characterized prominent regions of activation using GLMs.

Published:

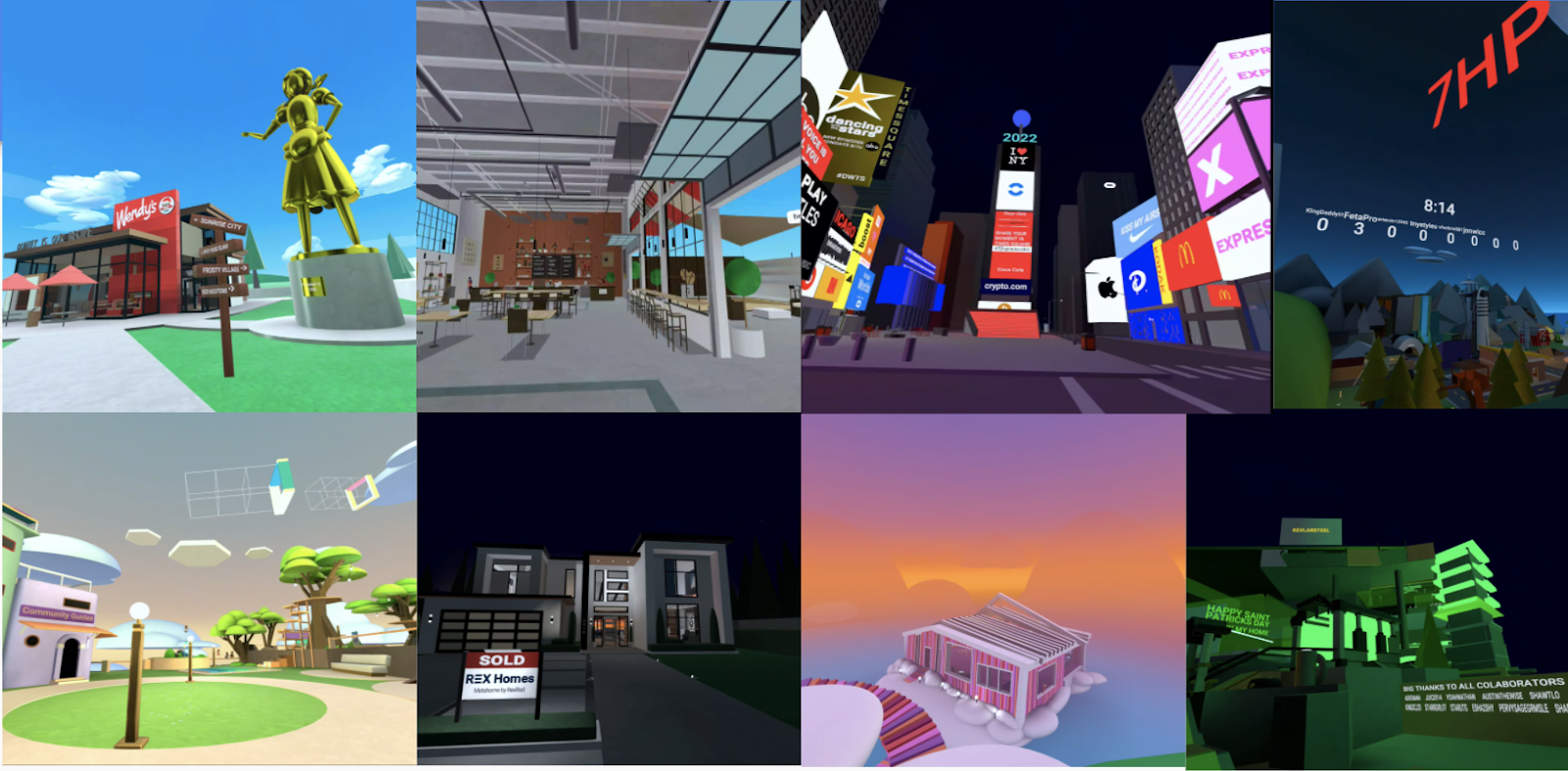

Despite recent attention, Horizon Worlds hasn’t been studied extensively as a social VR platform. Using ethnographic methods, my group studied the online VR community in Horizon Worlds. Based on observational reports and interviews, we found the creative community of world designers to be a prototypical community of practice for designing social VR experiences.

Published:

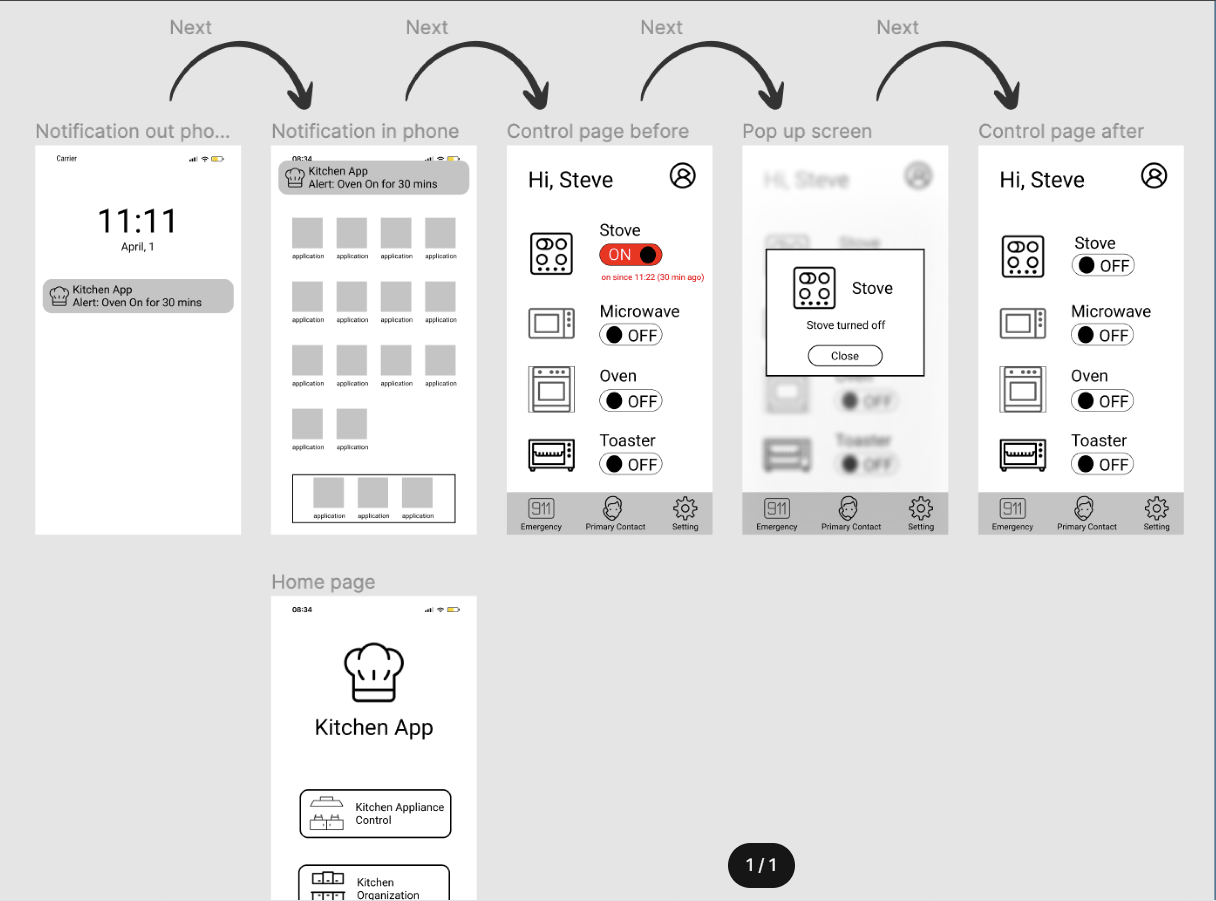

For elderly people with cognitive impairments, the kitchen can be dangerous. To reduce risks of burns, falling objects and memory lapses in the kitchen, we prototyped an intelligent stovetop appliance and mobile app interface. We conducted a Wizard of Oz study on the prototype and collected usability information from interviews, performance, and NASA-TLX.

coursework assistive-technology, ubiquitous-computing slides report

Published:

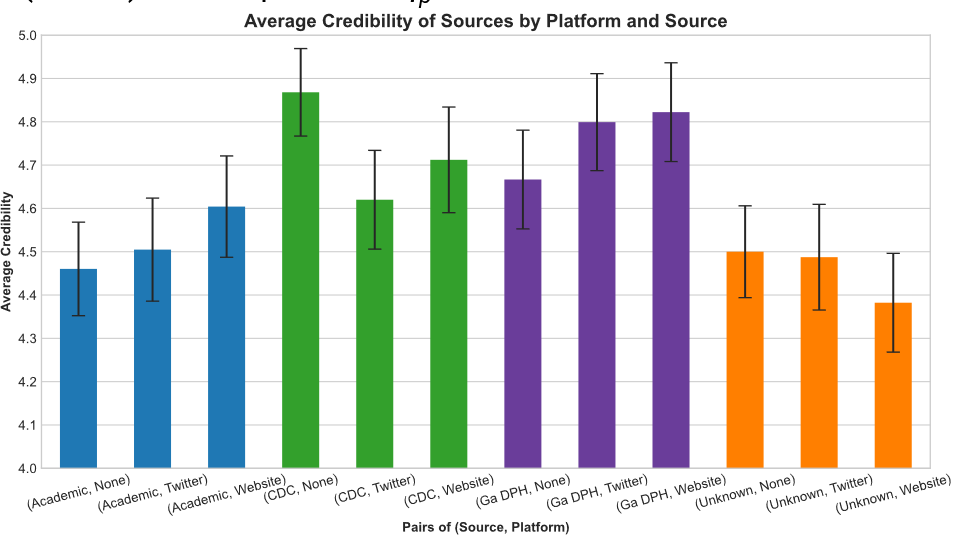

With the COVID-19 pandemic, delivering public health messages online has become a critical part of public healthcare. We examined how the credibility of public health messages regarding COVID-19 varies across platforms (Twitter, original website) and sources (CDC, Georgia Department of Health, independent academics) in a controlled experiment.

Published:

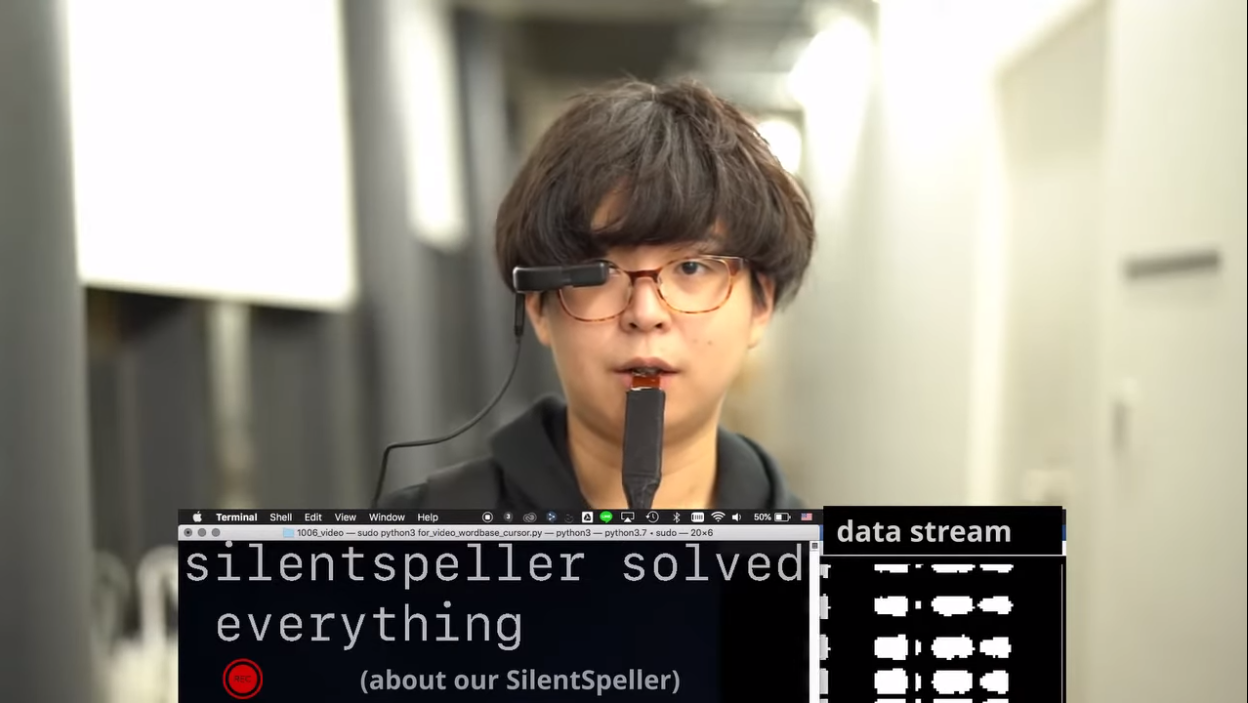

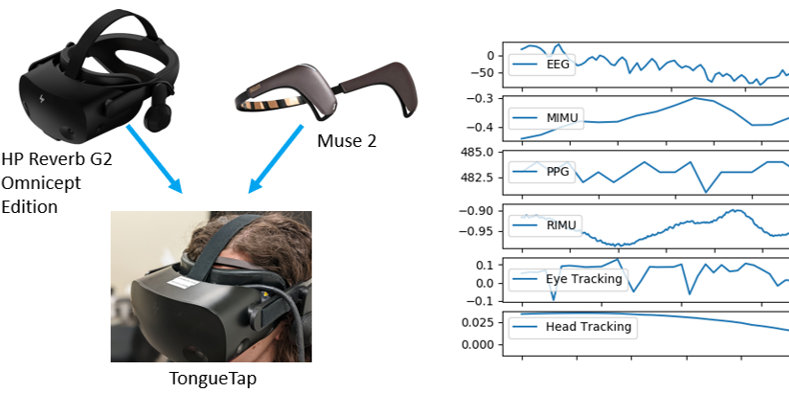

Tongue gestures are an accessible and subtle method for interacting with wearables but past studies have used custom hardware with a single sensing modality. At Microsoft Research, we used multimodal sensors in a commercial VR headset and EEG headband to build a 50,000 gesture dataset and real-time classifier. We also invented a new interaction method combining tongue and gaze to enable faster gaze-based selection in hands-free interactions.

research head-worn-displays, sensing, subtle-interaction ICMI'23 paper CHI'23 demo UbiComp'22 poster talk

Published:

The field of brain-computer interfaces (BCIs) is growing rapidly, but there’s a lack of reliable learning resources for students and new researchers. As part of my role in the Postdoc & Student Committee of the BCI Society, I worked to create tutorial sessions for teaching various topics in BCI at the BCI Meeting.

Published:

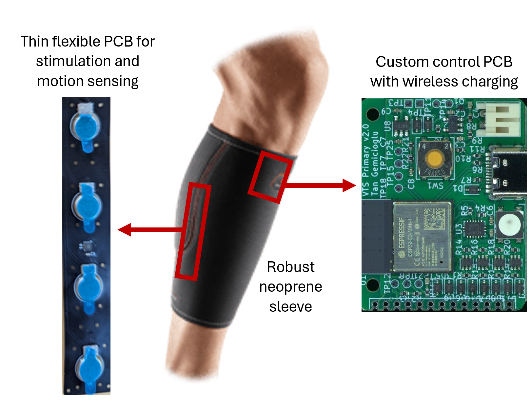

Designed flexible electronics for a low-cost haptic feedback device to rehabilitate post-stroke spasticity. With a low-cost, easy-to-use, take-home wearable technology, Touché is able to relieve the intolerable muscle contractions common after stroke and support activities of daily living.

Published:

Whereas past guided breathing systems use visual or tactile feedback, we designed BreathePulse, an airflow-based system for reducing respiratory rate more naturally and unobtrusively. We evaluated BreathePulse in an intensive n-back task and provided guidelines for making future guided breathing devices more effortless and comfortable for users.

research entrainment, respiration, stress, ubiquitous-computing IMWUT'24 paper

Published:

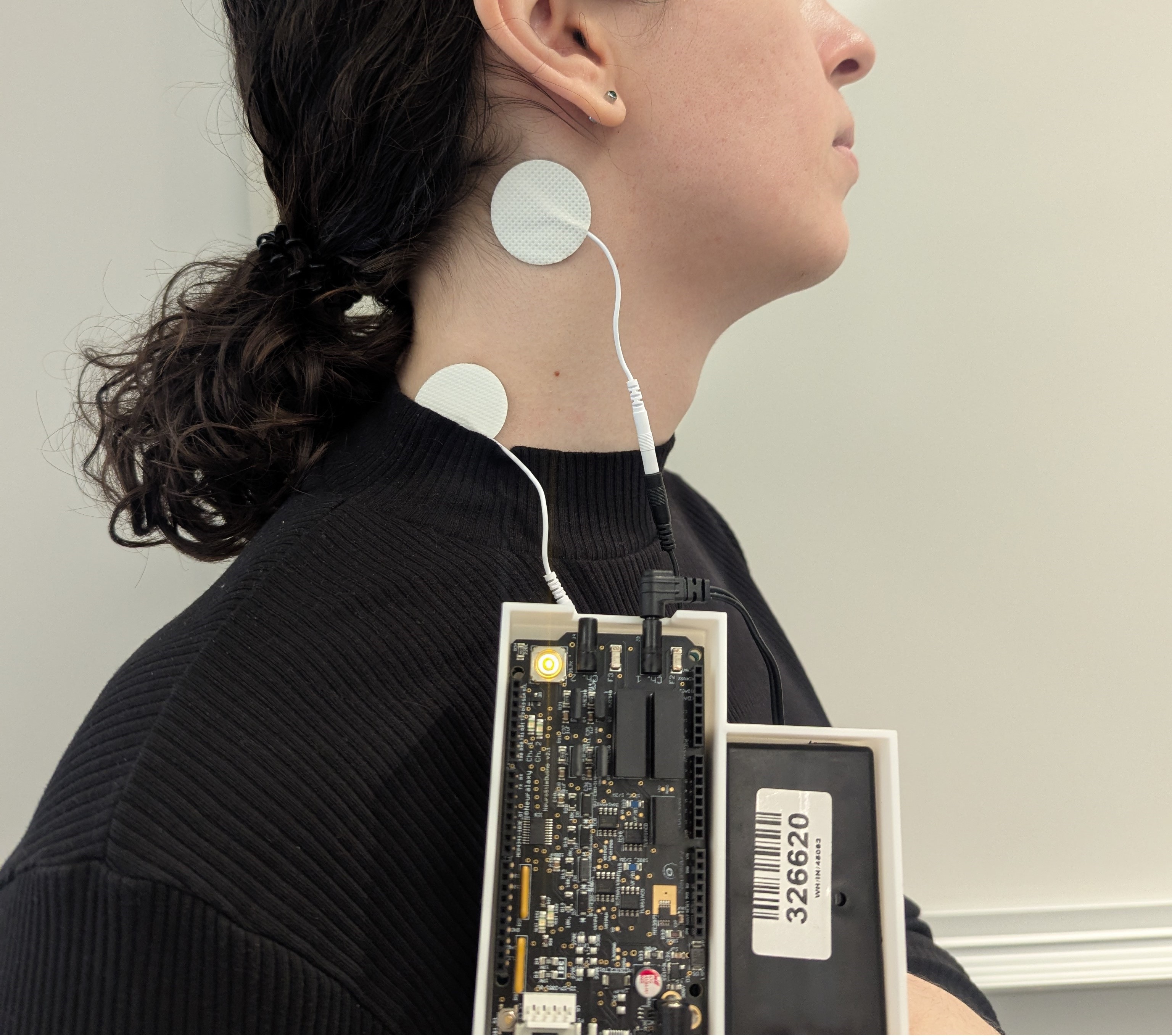

We propose momentary, non-invasive vagus nerve stimulation as a method for modulating satiety while people are eating. We believe that this approach can be effective to reduce emotional eating behaviors during daily life.

research eating, neural-interfaces, wearables InterfaceNeuro'25 poster

Published:

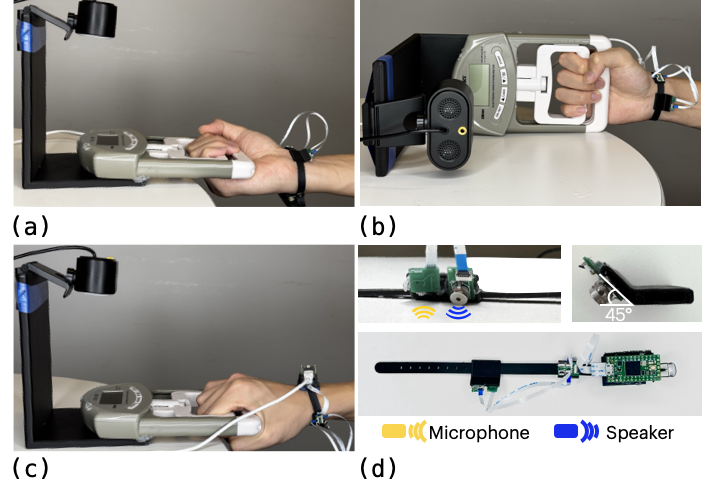

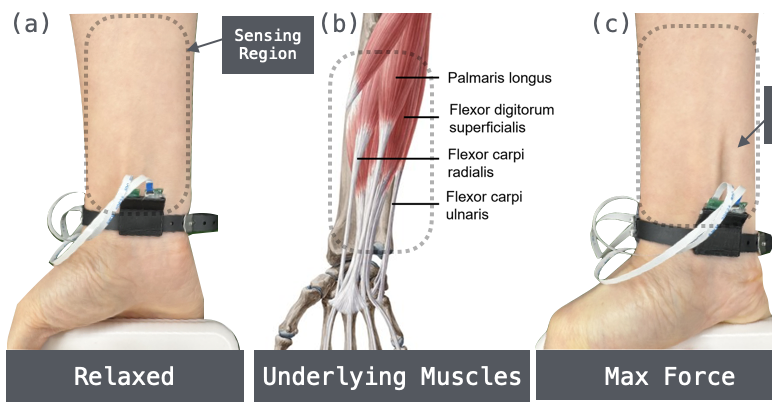

EchoForce is a wristband using a novel application of acoustic sensing to continuously measure grip force via subtle skin deformations using only a speaker and microphone. EchoForce is inexpensive, does not need calibration, and can be worn and taken off quickly, making it highly practical for wearable grip force measurement in health monitoring and HCI.

Published:

We are conducting a dual-phase clinical trial on the feasibility of using a wearable vibrotactile stimulation sleeve for post-stroke rehabilitation of spasticity in the leg and foot. In particular, we are investigating the neurophysiological mechanism of vibrotactile stimulation in rehabilitation, and its effect when used in conjunction with gait training.

Naoki Kimura, Tan Gemicioglu, Jonathan Womack, Richard Li, Yuhui Zhao, Abdelkareem Bedri, Alex Olwal, Jun Rekimoto, Thad Starner

Published in Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, 2021

Voice control provides hands-free access to computing, but there are many situations where audible speech is not appropriate. Most unvoiced speech text entry systems can not be used while on-the-go due to movement artifacts. SilentSpeller enables mobile silent texting using a dental retainer with capacitive touch sensors to track tongue movement. Users type by spelling words without voicing. In offline isolated word testing on a 1164-word dictionary, SilentSpeller achieves an average 97% character accuracy. 97% offline accuracy is also achieved on phrases recorded while walking or seated. Live text entry achieves up to 53 words per minute and 90% accuracy, which is competitive with expert text entry on mini-QWERTY keyboards without encumbering the hands.

Tan Gemicioglu, Noah Teuscher, Brahmi Dwivedi, Soobin Park, Emerson Miller, Celeste Mason, Caitlyn Seim, Thad Starner

Published in Intelligent Music Interfaces Workshop at the 2022 CHI Conference on Human Factors in Computing Systems, 2022

Passive haptic learning (PHL) uses vibrotactile stimulation to train piano songs using repetition, even when the recipient of stimulation is focused on other tasks. However, many of the benefits of playing piano cannot be acquired without actively playing the instrument. In this position paper, we posit that passive haptic rehearsal, where active piano practice is assisted by separate sessions of passive stimulation, is of greater everyday use than solely PHL. We propose a study to examine the effects of passive haptic rehearsal for self-paced piano learners and consider how to incorporate passive rehearsal into everyday practice.

Naoki Kimura, Tan Gemicioglu, Jonathan Womack, Richard Li, Yuhui Zhao, Abdelkareem Bedri, Zixiong Su, Alex Olwal, Jun Rekimoto, Thad Starner

Published in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, 2022

Speech is inappropriate in many situations, limiting when voice control can be used. Most unvoiced speech text entry systems can not be used while on-the-go due to movement artifacts. Using a dental retainer with capacitive touch sensors, SilentSpeller tracks tongue movement, enabling users to type by spelling words without voicing. SilentSpeller achieves an average 97% character accuracy in offline isolated word testing on a 1164-word dictionary. Walking has little effect on accuracy; average offline character accuracy was roughly equivalent on 107 phrases entered while walking (97.5%) or seated (96.5%). To demonstrate extensibility, the system was tested on 100 unseen words, leading to an average 94% accuracy. Live text entry speeds for seven participants averaged 37 words per minute at 87% accuracy. Comparing silent spelling to current practice suggests that SilentSpeller may be a viable alternative for silent mobile text entry.
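For readers unfamiliar with character accuracy, here is a minimal sketch of one common way to compute it: one minus the edit distance between the recognized and intended text, divided by the length of the intended text. The abstract does not say which definition the paper uses, so this definition and the function names `edit_distance` and `character_accuracy` are assumptions for illustration only.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum substitutions, insertions and deletions."""
    # prev[j] holds the distance between the processed reference prefix
    # and the first j characters of the hypothesis.
    prev = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        curr = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution_cost = 0 if ref_char == hyp_char else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1]


def character_accuracy(reference: str, hypothesis: str) -> float:
    """Assumed definition: 1 - edit_distance / len(reference)."""
    if not reference:
        raise ValueError("reference must be non-empty")
    return 1.0 - edit_distance(reference, hypothesis) / len(reference)
```

Under this definition, one wrong letter in a five-letter word gives 80% character accuracy, and heavily garbled output can score below zero because insertions count as errors.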

Asha Bhandarkar, Tan Gemicioglu, Brahmi Dwivedi, Caitlyn Seim, Thad Starner

Published in Proceedings of the 2022 ACM International Joint Conference on Pervasive and Ubiquitous Computing, 2022

Passive haptic learning (PHL) is a method for learning piano pieces through repetition of haptic stimuli while a user is focused on other daily tasks. In combination with active practice techniques, this method is a powerful tool for users to learn piano pieces with less time spent in active practice while also reducing cognitive effort and increasing retention. We propose a demo combining these two learning methods, in which attendees will engage in a short active practice session followed by a passive practice session using vibrotactile haptic gloves. Attendees will be able to experience the effects of passive haptic learning for themselves as well as gauge their mastery of the piece with a final performance at the end of the demo.

demo haptics, learning, piano doi paper video Best Demo Award

Tan Gemicioglu, Mike Winters, Yu-Te Wang, Ivan Tashev

Published in Proceedings of the 2022 ACM International Joint Conference on Pervasive and Ubiquitous Computing, 2022

Head worn displays are often used in situations where users’ hands may be occupied or otherwise unusable due to permanent or situational movement impairments. Hands-free interaction methods like voice recognition and gaze tracking allow accessible interaction with reduced limitations for user ability and environment. Tongue gestures offer an alternative method of private, hands-free and accessible interaction. However, past tongue gesture interfaces come in intrusive or otherwise inconvenient form factors preventing their implementation in head worn displays. We present a multimodal tongue gesture interface using existing commercial headsets and sensors only located in the upper face. We consider design factors for choosing robust and usable tongue gestures, introduce eight gestures based on the criteria and discuss early work towards tongue gesture recognition with the system.

poster gesture, sensing, subtle-interaction doi paper poster

Tan Gemicioglu, Yuhui Zhao, Melody Jackson, Thad Starner

Published in Proceedings of the 10th International Brain-Computer Interface Meeting, 2023

BCIs using imagined or executed movement enable subjects to communicate by performing gestures in sequential patterns. Conventional interaction methods have a one-to-one mapping between movements and commands but new methods such as BrainBraille have instead used a pseudo-binary encoding where multiple body parts are tensed simultaneously. However, non-invasive BCI modalities such as EEG and fNIRS have limited spatial specificity, and have difficulty distinguishing simultaneous movements. We propose a new method using transitions in gesture sequences to combinatorially increase possible commands without simultaneous movements. We demonstrate the efficacy of transitional gestures in a pilot fNIRS study where accuracy increased from 81% to 92% when distinguishing transitions of two movements instead of two movements independently. We calculate ITR for a potential transitional version of BrainBraille, where ITR would increase from 143bpm to 218bpm.
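For readers new to information transfer rate (ITR), here is a minimal sketch of the widely used Wolpaw definition, which gives bits per selection from the number of possible commands and the classification accuracy. The abstract does not state which ITR formula was used, so this is an assumption; the function names are illustrative, and the sketch is not meant to reproduce the 143 bpm or 218 bpm figures.

```python
import math


def bits_per_selection(n_classes: int, accuracy: float) -> float:
    """Wolpaw ITR in bits per selection for N equiprobable classes."""
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    p = accuracy
    bits = math.log2(n_classes)
    # The two entropy terms are skipped at p == 0 or p == 1,
    # where their limit is zero.
    if p > 0.0:
        bits += p * math.log2(p)
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n_classes - 1))
    return bits


def bits_per_minute(n_classes: int, accuracy: float,
                    selections_per_minute: float) -> float:
    """Scale bits per selection by the selection rate."""
    return bits_per_selection(n_classes, accuracy) * selections_per_minute
```

Under this definition, adding more distinguishable commands raises the ceiling of `log2(N)` bits per selection, which is why a combinatorial increase in possible commands can raise ITR if accuracy holds up.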

Tan Gemicioglu, R. Michael Winters, Yu-Te Wang, Thomas M. Gable, Ann Paradiso, Ivan J. Tashev

Published in Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, 2023

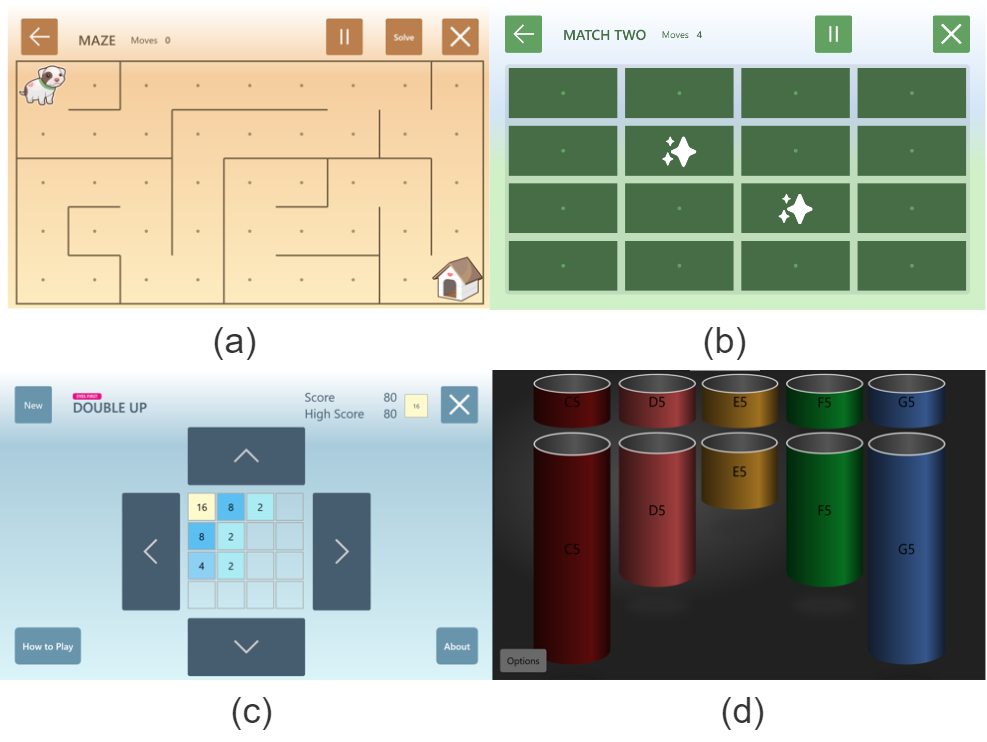

Gaze tracking allows hands-free and voice-free interaction with computers, and has gained more use recently in virtual and augmented reality headsets. However, it traditionally uses dwell time for selection tasks, which suffers from the Midas Touch problem. Tongue gestures are subtle, accessible and can be sensed non-intrusively using an IMU at the back of the ear, PPG and EEG. We demonstrate a novel interaction method combining gaze tracking with tongue gestures for gaze-based selection faster than dwell time and multiple selection options. We showcase its usage as a point-and-click interface in three hands-free games and a musical instrument.

demo gaze, gesture, sensing, subtle-interaction doi paper Best Demo Finalist

Tan Gemicioglu, R. Michael Winters, Yu-Te Wang, Thomas M. Gable, Ivan J. Tashev

Published in Proceedings of the 25th International Conference on Multimodal Interaction, 2023

Mouth-based interfaces are a promising new approach enabling silent, hands-free and eyes-free interaction with wearable devices. However, interfaces sensing mouth movements are traditionally custom-designed and placed near or within the mouth. TongueTap synchronizes multimodal EEG, PPG, IMU, eye tracking and head tracking data from two commercial headsets to facilitate tongue gesture recognition using only off-the-shelf devices on the upper face. We classified eight closed-mouth tongue gestures with 94% accuracy, offering an invisible and inaudible method for discreet control of head-worn devices. Moreover, we found that the IMU alone differentiates eight gestures with 80% accuracy and a subset of four gestures with 92% accuracy. We built a dataset of 48,000 gesture trials across 16 participants, allowing TongueTap to perform user-independent classification. Our findings suggest tongue gestures can be a viable interaction technique for VR/AR headsets and earables without requiring novel hardware.

full-paper gesture, sensing, subtle-interaction doi paper dataset

David Martin, Zikang Leng, Tan Gemicioglu, Jon Womack, Jocelyn Heath, Bill Neubauer, Hyeokhyen Kwon, Thomas Plöetz, Thad Starner

Published in The 25th International ACM SIGACCESS Conference on Computers and Accessibility, 2023

Camera-based text entry using American Sign Language (ASL) fingerspelling has become more feasible due to recent advancements in recognition technology. However, there are numerous situations where camera-based text entry may not be ideal or acceptable. To address this, we present FingerSpeller, a solution that enables camera-free text entry using smart rings. FingerSpeller utilizes accelerometers embedded in five smart rings from TapStrap, a commercially available wearable keyboard, to track finger motion and recognize fingerspelling. A Hidden Markov Model (HMM) based backend with continuous Gaussian modeling facilitates accurate recognition as evaluated in a real-world deployment. In offline isolated word recognition experiments conducted on a 1,164-word dictionary, FingerSpeller achieves an average character accuracy of 91% and word accuracy of 87% across three participants. Furthermore, we demonstrate that the system can be downsized to only two rings while maintaining an accuracy level of approximately 90% compared to the original configuration. This reduction in form factor enhances user comfort and significantly improves the overall usability of the system.

Tan Gemicioglu, Elijah Hopper, Brahmi Dwivedi, Richa Kulkarni, Asha Bhandarkar, Priyanka Rajan, Nathan Eng, Adithya Ramanujam, Charles Ramey, Scott M. Gilliland, Celeste Mason, Caitlyn Seim, Thad Starner

Published in Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Volume 8, Issue 4, 2024

Passive haptic learning (PHL) is a method for training motor skills via intensive repetition of haptic stimuli while a user is focused on other tasks. For the practical application of PHL to music education, we propose passive haptic rehearsal (PHR) where PHL is combined with deliberate active practice. We designed a piano teaching system that includes haptic gloves compatible with daily wear, a Casio keyboard with light-up keys, and an online learning portal that enables users to track performance, choose lessons, and connect with the gloves and keyboard. We conducted a longitudinal two-week study in the wild, where 36 participants with musical experience learned to play two piano songs with and without PHR. For 20 participants with complete and valid data, we found that PHR boosted the learning rate for the matching accuracy by 49.7% but did not have a significant effect on learning the notes' rhythm. Participants across all skill levels in the study require approximately two days less to reach mastery on the songs practiced when using PHR. We also confirmed that PHR boosts recall between active practice sessions. We hope that our results and system will enable the deployment of PHL beyond the laboratory.

full-paper haptics, implicit-interfaces, learning, piano doi paper

Tan Gemicioglu*, Thalia Viranda*, Yiran Zhao*, Olzhas Yessenbayev, Jatin Arora, Jane Wang, Pedro Lopes, Alexander T. Adams, Tanzeem Choudhury

Published in Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Volume 8, Issue 4, 2024

Workplace stress contributes to poor performance and adverse health outcomes, yet current stress management tools often fall short in the fast-paced modern workforce. Guided slow breathing is a promising intervention for stress and anxiety, with peripheral breathing guides being explored for concurrent task use. However, their need for explicit user engagement underscores the need for more seamless, implicit interventions optimized for workplaces. In this mixed-method, controlled study, we examined the feasibility and effects of BreathePulse, a laptop-mounted device that delivers pulsing airflow to the nostrils as an implicit cue, on stress, anxiety, affect, and workload during two levels of a memory (N-Back) task with 23 participants. We found that BreathePulse, the first airflow-only breathing guide, effectively promoted slow breathing, particularly during the easy memory task. Participants' breathing rates aligned with BreathePulse's guidance across tasks, with the longest maintenance of slow breathing – over 40% of the time – during the easy task. Although BreathePulse increased workload and had little impact on stress, it promoted mindfulness, indicating its potential for stress management in the workplace.

full-paper entrainment, implicit-interfaces, respiration doi paper

Stephanie L Cernera*, Tan Gemicioglu*, Julia Berezutskaya, Richard Csaky, Maxime Verwoert, Daniel Polyakov, Sotirios Papadopoulos, Valeria Spagnolo, Juliana Gonzalez-Astudillo, Satyam Kumar, Hussein Alawieh, Dion Kelly, Joanna R G Keough, Araz Minhas, Matthias Dold, Yiyuan Han, Alexander McClanahan, Mousa Mustafa, Juan Jose Gonzalez-Espana, Florencia Garro, Angela Vujic, Kriti Kacker, Christoph Kapeller, Simon H Geukes, Ceci Verbaarschot, Michael Wimmer, Mushfika Sultana, Sara Ahmadi, Christian Herff, Andreea Ioana Sburlea, Camille Jeunet, David E Thompson, Marianna Semprini, Richard A Andersen, Sergey Stavisky, Eli Kinney-Lang, Fabien Lotte, Jordy Thielen, Xing Chen, Victoria Peterson, Aysegul Gunduz, Theresa M Vaughan**, Davide Valeriani**

Published in Journal of Neural Engineering, 2025

The Tenth International Brain-Computer Interface (BCI) Meeting was held June 6-9, 2023 in the Sonian Forest in Brussels, Belgium. At that meeting, 21 master classes, organized by the BCI Society's Postdoc & Student Committee, supported the Society's goal of fostering learning opportunities and meaningful interactions for trainees in BCI-related fields. Master classes provide an informal environment where senior researchers can give constructive feedback to the trainee on their chosen and specific pursuit. The topics of the master classes span the whole gamut of BCI research and techniques. These include data acquisition, neural decoding and analysis, invasive and noninvasive stimulation, and ethical and transitional considerations. Additionally, master classes spotlight innovations in BCI research. Herein, we discuss what was presented within the master classes by highlighting each trainee and expert researcher, providing relevant background information and results from each presentation, and summarizing discussion and references for further study.

Tan Gemicioglu, Tanzeem Choudhury

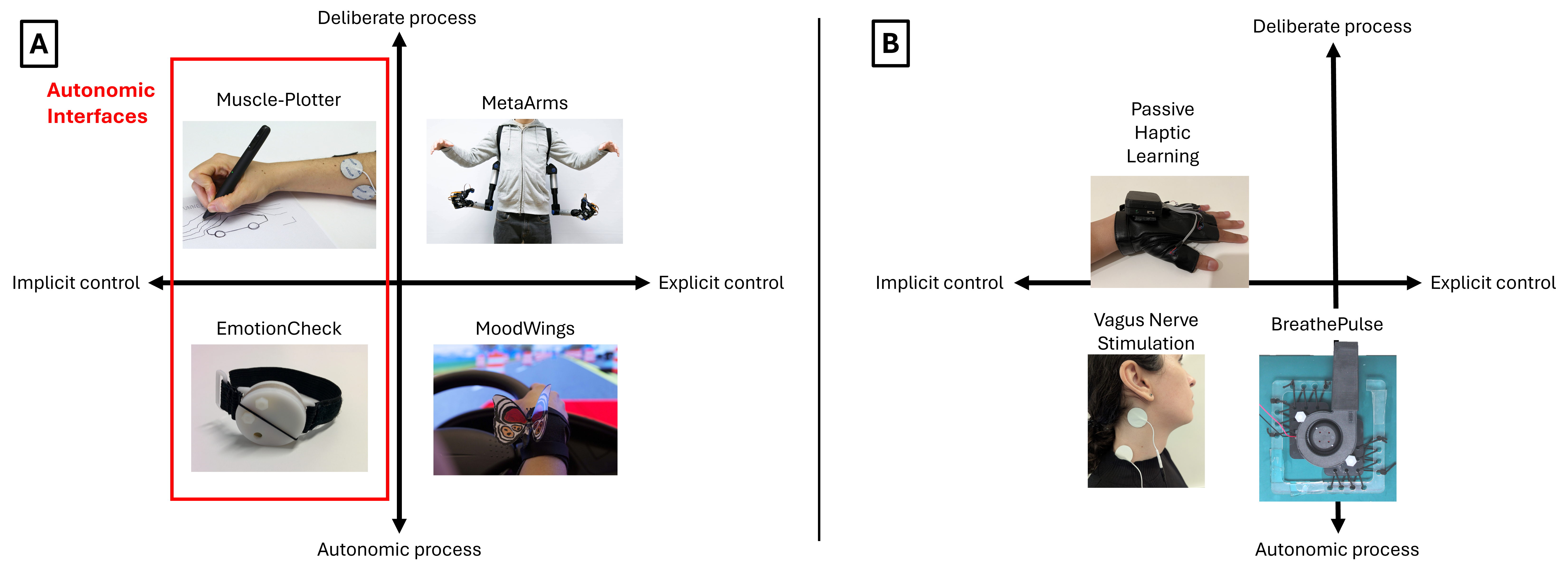

Published in Sensorimotor Devices Workshop at the 2025 CHI Conference on Human Factors in Computing Systems, 2025

Autonomic processes are responsible for maintaining the most vital bodily functions, yet physiological interfaces have had limited success in continuously augmenting these functions. A new wave of wearable and ubiquitous devices have begun to sense and actuate autonomic processes of the human body while requiring little deliberate input from the user. In this position paper, we explore three examples of such devices from our work: An airflow breathing guide, a nerve stimulator for modulating satiety and a haptic glove for implicitly learning piano. The design and experience of these devices provide a unique perspective on control and awareness in interaction. We synthesize common trends for the design of “autonomic interfaces,” sensorimotor devices that augment users’ abilities implicitly by mirroring the mechanisms of autonomic physiological processes.

Yujie Tao, Tan Gemicioglu, Sam Chin, Bingjian Huang, Jas Brooks, Sean Follmer, Pedro Lopes, Suranga Nanayakkara

Published in Adjunct Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology, 2025

Human senses are fundamental to how we interpret and interact with the world. Computing devices are increasingly coupled with the human sensory system through interfaces such as smart glasses, earbuds, and wristbands. This opens up opportunities to dynamically mediate, modify, and augment perceptual experiences and physiological processes through multisensory stimulation. These devices go beyond assistive technologies designed for individuals with sensory impairments (e.g., hearing aids) and are now available for everyday use. Applications range from enriching immersive entertainment experiences to supporting well-being through multisensory interventions. The UIST community has been a key venue for introducing many proof-of-concept prototypes in multisensory stimulation. However, gaps remain in systematically understanding how such technologies can be designed, studied, and contextualized in long-term, everyday use. This workshop will examine barriers to transitioning prototypes from proof-of-concepts into systems for real-world use. The session will feature keynote talks, demo sessions, and an interactive device-swap activity where participants exchange and wear different devices during the afternoon session, and conclude with an open discussion to develop implementation frameworks.

Kian Mahmoodi*, Yudong Xie*, Tan Gemicioglu*, Chi-Jung Lee, Jiwan Kim, Cheng Zhang

Published in Proceedings of the 2025 ACM International Symposium on Wearable Computers, 2025

Grip force is commonly used as an overall health indicator in older adults and is valuable for tracking progress in physical training and rehabilitation. Existing methods for wearable grip force measurement are cumbersome and user-dependent, making them insufficient for practical, continuous grip force measurement. We introduce EchoForce, a novel wristband using acoustic sensing for low-cost, non-contact measurement of grip force. EchoForce captures acoustic signals reflected from subtle skin deformations by flexor muscles on the forearm. In a user study with 11 participants, EchoForce achieved a fine-tuned user-dependent mean error rate of 9.08% and a user-independent mean error rate of 12.3% using a foundation model. Our system remained accurate between sessions, hand orientations, and users, overcoming a significant limitation of past force sensing systems. EchoForce makes continuous grip force measurement practical, providing an effective tool for health monitoring and novel interaction techniques.
